Riding the wave of misinformation in the ocean of crisis

Arushi Sethi

Source: Photo by CJ Dayrit on Unsplash

The human race is in the middle of one of the biggest medical crises of modern times. The constant buzzing of my phone, with its endless supply of unsolicited advice on how to face this hazard, reinforces my fears. I am in quarantine. I panic, and humbly begin to write this piece.

What particularly concerns me is the spread of health advice. This is often done with the best of intentions: when a situation is scary, it's natural to pass on advice that you think might help protect your friends and family. But if that information turns out to be inaccurate, you risk doing more harm than good. Falsehood flourishes on ignorance and an absence of context, and in times of crisis that ignorance can be lethal.

“All the truth in the world adds up to one big lie.”

– Bob Dylan

We humans are social creatures. We use stories to make sense of our world and to share that understanding with other humans. Stories are the signal within the noise. So powerful is the human impulse to detect story patterns that we see them even when they are not there.

Is there a single point of truth?

- What you believe is true (for you); what I believe is true (for me).

- Truth is subjective.

- Truth is in the eye of the beholder.

- Different strokes for different folks.

The digital revolution has fundamentally changed the way information is produced, communicated and distributed. We are the pursuers; we are the ones the deception is aimed at. Sadly, we are also the ones who keep this game of Chinese whispers going, passing data and information along. Have you ever wondered where we, as designers, stand in the aftermath of the post-truth era?

Gossipy titbits, paranoid ideas and faux data are a million light years away from new. The circulation of deceptive content may be a by-product of our relationship with data. Who owns any of the mass forwards we receive or share? Do we care to verify what we consume, or do we just postpone this introspection until we hit snooze on that family WhatsApp group again?

When seeing is no longer believing, how do we establish the truth?

“There’s so much active misinformation and it’s packaged very well and it looks the same when you see it on a Facebook page or you turn on your television. If everything seems to be the same and no distinctions are made, then we won’t know what to protect.” – Barack Obama

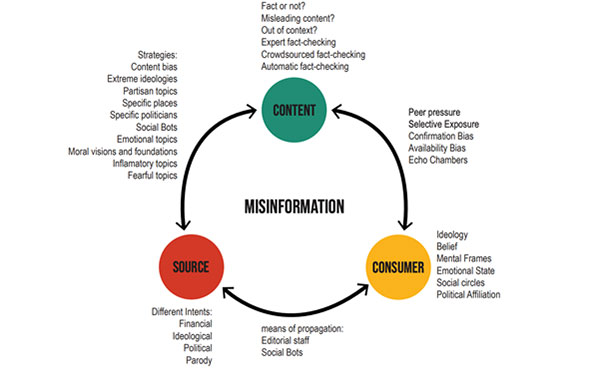

Source: Human-Misinformation_interaction_Understanding_the.pdf

The core differentiator is the intent behind sharing information. We can broadly sort problematic information into three buckets:

- Mis-information is when false information is shared, but no harm is meant.

- Dis-information is when false information is knowingly shared to cause harm.

- Mal-information is when genuine information is shared to cause harm, often by moving information designed to remain private into the general public sphere.

Elements of misinformation and their relations

Source: Human-Misinformation_interaction_Understanding_the.pdf

It's unrealistic to expect users to innately want to grasp the broader context of why a particular story is treated as misinformation. It's only human, after all, to search for, interpret, favour and recall information in a way that confirms or strengthens one's prior personal beliefs. Users often don't understand how message chains spread, and so, by forwarding unverified claims or content, they contribute to a misinformation wildfire.

“When a thousand people believe some made-up story for a month – that's fake news. When a billion people believe it for a thousand years – that's religion, and we are admonished not to call it fake news in order not to hurt the feelings of the faithful.” – Yuval Noah Harari, 21 Lessons for the 21st Century

This makes me wonder…

How might we design experiences that make users less vulnerable to products that scratch their emotional itch?

Photo: Jonathan Kitchen / Getty Images

Human brains crave dopamine. We are wired to want more. Something as mundane as a person liking a piece of content you shared can trigger a chemical reaction similar to a gambler's high. When this state of emotional vulnerability is coupled with shocking headlines, our fight-or-flight response kicks in: faced with a stimulus that feels terrifying, mentally or physically, the body releases hormones that prepare it either to stay and deal with the threat or to run to safety. We begin sharing, and oversharing, compulsively. This puts users in a loop of receiving, anticipating and disseminating content. Simply put, this loop is human behaviour on steroids.

Source: Adobe

Enabling users to scrutinize the authenticity of the information they engage with can disrupt this chain reaction. The interfaces we create must allow users to recognize authentic information, seek out sources and make shrewd choices about the content they choose to circulate further. Information and media literacy teach people the full picture, the story and the other side of the story, so that they can make proactive choices about the information they consume and share.

How can we improve the health of the experiences we design?

Much like doctors, we designers diagnose problems; unlike them, we have often, knowingly or unknowingly, created those problems in the first place. To improve the health of our interfaces, we need to focus a large share of our energy on designing for transparency and galvanizing critical thinking in our users.

By what criteria can users judge the authenticity of the information they receive or circulate?

- Provenance: Are they looking at the original account, article or piece of content?

- Source: Who created the account or article, or captured the original piece of content?

- Date: When was it created?

- Location: Where was the account or author established, the website created or the piece of content captured?

- Motivation: Why was the account or author established, the website created or the piece of content captured?
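To make these five checks a little more concrete for designers working alongside developers, here is a minimal Python sketch that models them as a record attached to a shared item and turns any unanswered checks into a prompt shown before sharing. The names `ProvenanceRecord`, `unanswered_checks` and `share_prompt` are hypothetical, and the sketch assumes an interface that can fill in (or fail to fill in) each field; it is an illustration, not how any particular platform implements verification.

```python
from dataclasses import dataclass, fields
from typing import List, Optional


@dataclass
class ProvenanceRecord:
    """Hypothetical record of the five verification checks for one shared item.

    A value of None means the interface could not answer that check.
    """
    provenance: Optional[str] = None  # is this the original account/article/content?
    source: Optional[str] = None      # who created or captured it?
    date: Optional[str] = None        # when was it created?
    location: Optional[str] = None    # where was it created or captured?
    motivation: Optional[str] = None  # why was it created?


def unanswered_checks(record: ProvenanceRecord) -> List[str]:
    """Return the names of the checks left unanswered, in declaration order."""
    return [f.name for f in fields(record) if getattr(record, f.name) is None]


def share_prompt(record: ProvenanceRecord) -> str:
    """Build a hypothetical nudge to show the user before they forward the item."""
    missing = unanswered_checks(record)
    if not missing:
        return "All five checks answered."
    return "Before sharing, unverified: " + ", ".join(missing)


if __name__ == "__main__":
    # A forward that only carries a date: the other four checks are unanswered.
    forward = ProvenanceRecord(date="2020-03-18")
    print(share_prompt(forward))
```

For example, a forward that carries only a date produces the prompt "Before sharing, unverified: provenance, source, location, motivation". The design choice here is to surface what is missing rather than to judge the content as true or false, which keeps the decision, and the critical thinking, with the user.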

If we built these parameters into the interfaces we design for the long term, we would communicate far more clearly to users what data is being collected and why, and give them more control over what they opt into and over the algorithms that shape their encounters with information. That would move us far away from the opaque “free platform in exchange for data” barter.

PS: While it's important to report on problems and issues in times of crisis, I feel there is so much good to celebrate in this world that it should be found and promoted just as widely.

Let's examine how products that almost everyone is hooked on have adapted to this challenge:

Source: https://www.theverge.com/2019/10/21/20925204/facebook-2020-election-interference-prevention-tools-policy-false-misinformation

Facebook now labels false posts more clearly, which serves as a disclaimer even though the content is still hosted on its platform.

As MIT Technology Review reported on February 5th, 2020, in the same way that Google has begun its journey to combat deepfakes, Adobe has recently announced its own service to spot manipulated images and videos through the use of AI. The company that pioneered photo and video editing is now helping people tell Photoshopped images apart from real ones.

Full disclosure

In August 2019, YouTube added disclaimer text next to the video player so that users could recognize which organization or entity is behind the content they're watching.

Source : https://9to5google.com/2019/08/27/youtube-labels-government-funding/

Rise of the social conscience

The Guardian has added the date an article was published to its social thumbnails, to keep readers from re-sharing old reports and mistaking them for current ones.

Source: https://www.theguardian.com/help/insideguardian/2019/apr/02/why-were-making-the-age-of-our-journalism-clearer

To conclude…

Creating digital experiences comes with responsibility. Whether we have 5 users or 5 billion, we are shaping the way people think. Let's own that responsibility so that we, our users and the field as a whole can grow in a positive and healthy direction. It's an opportunity not only to raise the quality of the experiences we deliver, but also to make them user-centric at their core. It's our duty to be mindful of the wave of misinformation in the ocean of crisis, and to incorporate practices into our design process that restore power, relief and health to our users.

References:

- https://medium.com/artefact-stories/stop-designing-for-delight-start-designing-for-transparency-39113cf8014

- https://misinfocon.com/designing-our-way-to-a-health-information-ecosystem-1efc97fe6000

- https://www.digitaltrends.com/social-media/social-media-sites-good-and-popular/?itm_medium=editors

- https://firstdraftnews.org/wp-content/uploads/2019/10/Verifying_Online_Information_Digital_AW.pdf?x95059

- Kwan, V. (2019) First Draft's Essential Guide to Responsible Reporting in an Age of Information Disorder, London: First Draft. https://firstdraftnews.org/wp-content/uploads/2019/10/Responsible_Reporting_Digital_AW-1.pdf

- https://trends.uxdesign.cc/

- https://www.nytimes.com/2019/01/19/opinion/sunday/fake-news.html

- https://uxplanet.org/ux-has-a-fake-news-problem-210e7a8cf3c5

- Human-Misinformation_interaction_Understanding_the.pdf

- Elements of misinformation and their relations

- https://www.technologyreview.com/f/615143/google-ai-deepfakes-manipulated-images-jigsaw-assembler/

- https://uxdesign.cc/designing-for-the-post-truth-era-5867431bc9f6

- https://www.amenclinics.com/blog/sex-drugs-rock-n-roll-smartphones-video-games-the-brain/

- http://sitn.hms.harvard.edu/flash/2018/dopamine-smartphones-battle-time/

- https://www.behance.net/gallery/48769119/Le-Monde-Newspaper-Illustration-4